Emotion Recognition

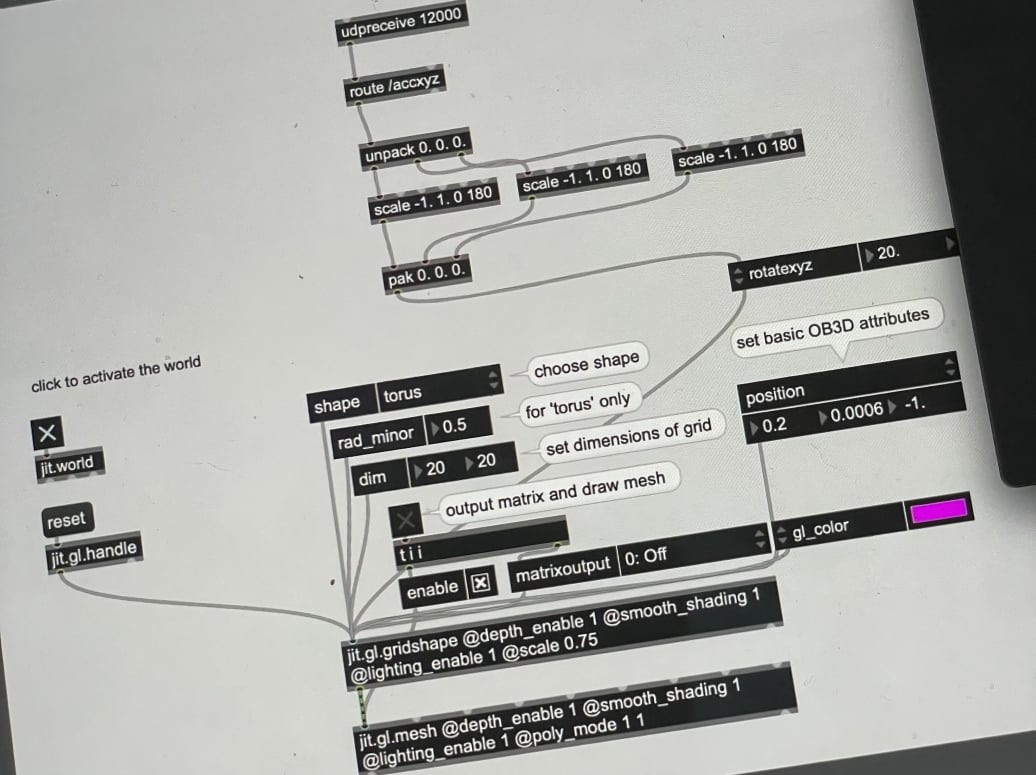

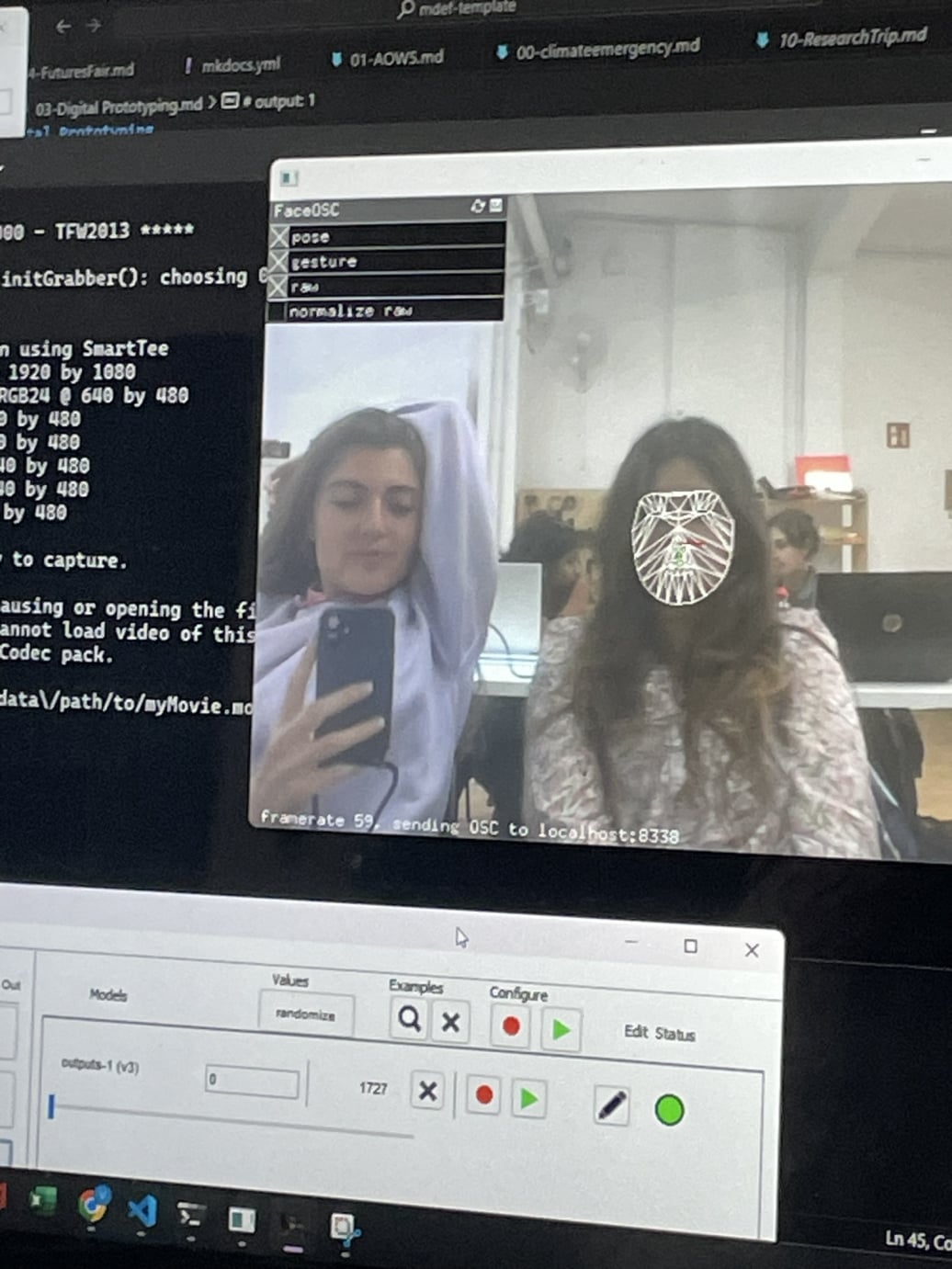

For this part of the task, we connected Wekinator, an AI training programme, to FaceOSC, a face-tracking application. We trained Wekinator on a wide variety of facial expressions, captured through computer vision, so that it could recognise whether or not we were surprised. The output was converted into a numerical value (0 = nothing, 1 = surprised), which we later used to link our facial expressions to a particular kind of output.
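
To illustrate how the 0/1 value could be picked up by another programme, here is a minimal sketch in Python. It is not the code we used, and it rests on several assumptions: that Wekinator sends its output as a single OSC message with float values to port 12000 at the address `/wek/outputs` (its usual defaults, as far as we know), and that a threshold of 0.5 separates "nothing" from "surprised". The function names `parse_osc_floats`, `is_surprised` and `listen` are made up for this example.

```python
import socket
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string that is padded to a 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and padding: next multiple of 4 after `end`.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_floats(packet):
    """Parse one OSC message whose arguments are all 32-bit floats.

    Returns (address, [values]). Bundles and other argument types
    are not handled in this sketch.
    """
    address, offset = _read_padded_string(packet, 0)
    type_tags, offset = _read_padded_string(packet, offset)
    values = []
    for tag in type_tags.lstrip(","):
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        (value,) = struct.unpack_from(">f", packet, offset)  # big-endian float
        values.append(value)
        offset += 4
    return address, values


def is_surprised(output, threshold=0.5):
    """Map the numerical output to a yes/no answer (threshold is an assumption)."""
    return output >= threshold


def listen(port=12000, address="/wek/outputs"):
    """Print the detected state for every message received (assumed defaults)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind(("127.0.0.1", port))
    while True:
        packet, _ = sock.recvfrom(1024)
        received_address, values = parse_osc_floats(packet)
        if received_address == address and values:
            print("surprised" if is_surprised(values[0]) else "nothing")


if __name__ == "__main__":
    listen()
```

With Wekinator running, starting this script would print "surprised" or "nothing" for each incoming value; the `print` call is the place where a sound, visual or other output could be triggered instead.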