We then learned about the different layers behind an AI system:

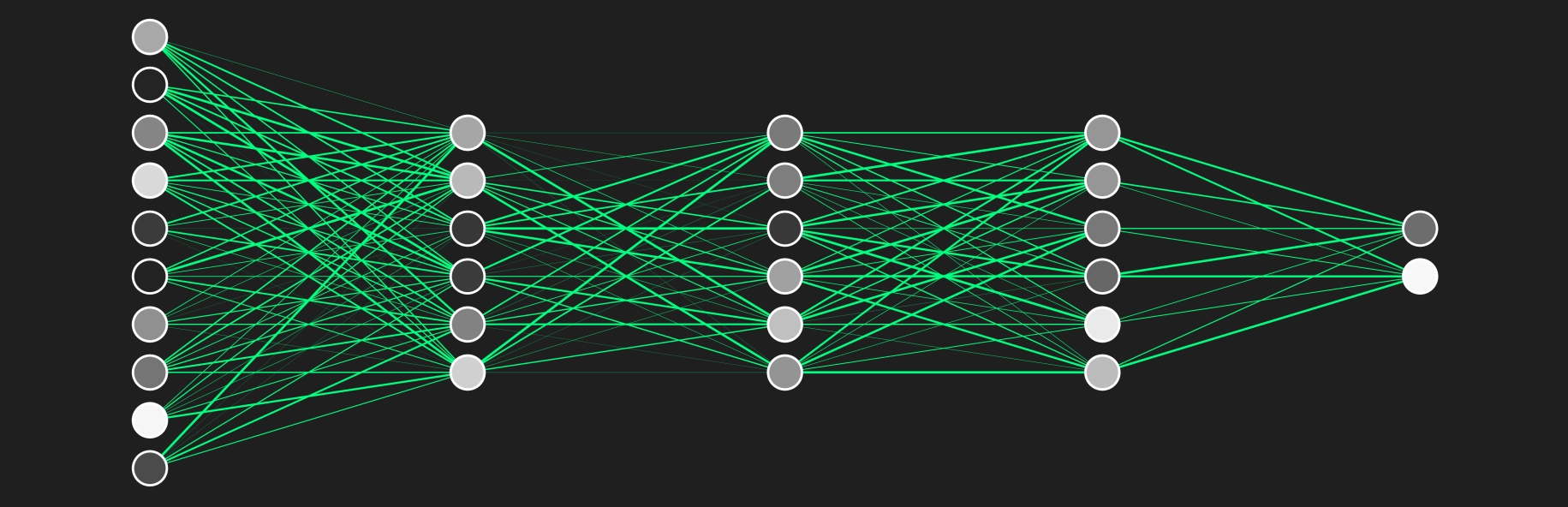

THE NEURAL NETWORK

A large mathematical structure, designed for a specific task, that can configure itself from data. Neural networks are mostly written in Python. They are not standalone programs that we install but pieces of software that we integrate into our own programs. Many of them are published as open source and can be found on GitHub.
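To make "configure itself from data" concrete, here is a minimal sketch in plain Python, not taken from any particular library. It shows the smallest possible case: a single neuron with a linear output and two parameters (`w` and `b`), adjusted by gradient descent on made-up data generated from the rule y = 2x + 1. The function name `train_linear_neuron` and the epoch and learning-rate values are illustrative assumptions.

```python
def train_linear_neuron(xs, ys, epochs=2000, lr=0.05):
    """Fit a single linear neuron, y_hat = w * x + b, by gradient descent.

    Hypothetical helper for illustration: real neural networks have many
    neurons and layers, but they also learn by gradient-based updates.
    """
    w, b = 0.0, 0.0  # the parameters start out knowing nothing
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Nudge each parameter a small step against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy training data, generated from the rule y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]

w, b = train_linear_neuron(xs, ys)
print(f"learned w = {w:.3f}, b = {b:.3f}")  # close to w = 2, b = 1
```

The values 2 and 1 are never written into the training code; the loop discovers them from the examples, which is what self-configuration from data means here.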

THE DATASET

A sample of data used to train a neural network. It can be quantitative (numbers, measurements) or qualitative (categories, descriptions), and it always contains some bias, which carries over into the trained AI system. Websites offering ready-made datasets include Kaggle and Papers with Code.
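The link between dataset bias and model behaviour can be seen in a small sketch. The dataset below is invented for illustration, and the "model" is deliberately not a neural network but a majority-class baseline (it always predicts the most common label), chosen because it makes the effect obvious. The names `column_kinds` and `train_majority_model` are hypothetical.

```python
from collections import Counter

# Hypothetical toy dataset: "weight_kg" is quantitative (a number),
# "fur" is qualitative (a category), "label" is what we want to predict.
# Note the imbalance: six cats, only two dogs.
dataset = [
    {"weight_kg": 4.1, "fur": "short", "label": "cat"},
    {"weight_kg": 3.8, "fur": "long", "label": "cat"},
    {"weight_kg": 5.0, "fur": "short", "label": "cat"},
    {"weight_kg": 4.4, "fur": "long", "label": "cat"},
    {"weight_kg": 3.9, "fur": "short", "label": "cat"},
    {"weight_kg": 4.7, "fur": "short", "label": "cat"},
    {"weight_kg": 12.0, "fur": "short", "label": "dog"},
    {"weight_kg": 30.5, "fur": "long", "label": "dog"},
]


def column_kinds(rows):
    """Classify each feature column as quantitative or qualitative."""
    kinds = {}
    for key, value in rows[0].items():
        if key == "label":
            continue
        is_number = isinstance(value, (int, float))
        kinds[key] = "quantitative" if is_number else "qualitative"
    return kinds


def train_majority_model(rows):
    """The simplest possible 'model': always predict the most common label."""
    counts = Counter(row["label"] for row in rows)
    majority_label, _ = counts.most_common(1)[0]

    def model(row):
        return majority_label  # ignores the input entirely

    return model


print(column_kinds(dataset))
model = train_majority_model(dataset)
print(model({"weight_kg": 25.0, "fur": "long"}))
```

Because six of the eight examples are cats, this model calls a 25 kg long-haired animal a cat. A neural network trained on the same data would behave more subtly, but it would tend to inherit the same imbalance.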

THE LIBRARY

A Python library is a collection of ready-made, reusable functionality that you can import into your code instead of writing it yourself.
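As a small illustration of reuse instead of coding, the sketch below computes a mean and a median twice: once with hand-written functions, and once with `statistics.mean` and `statistics.median` from Python's built-in `statistics` module. The hand-written `my_mean` and `my_median` exist only for comparison. Third-party libraries used for AI are installed separately (for example with pip), but they are imported and reused in the same way.

```python
import statistics

data = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]


# Without a library: write the functionality yourself.
def my_mean(values):
    return sum(values) / len(values)


def my_median(values):
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 0:
        # Even number of values: average the two middle ones
        return (ordered[mid - 1] + ordered[mid]) / 2
    return ordered[mid]


# With a library: import it and reuse code someone else wrote and tested.
lib_mean = statistics.mean(data)
lib_median = statistics.median(data)

print(my_mean(data), lib_mean)
print(my_median(data), lib_median)
```

Both approaches give the same answers, but the library version needs no code of your own and has already been tested on edge cases you might not think of.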

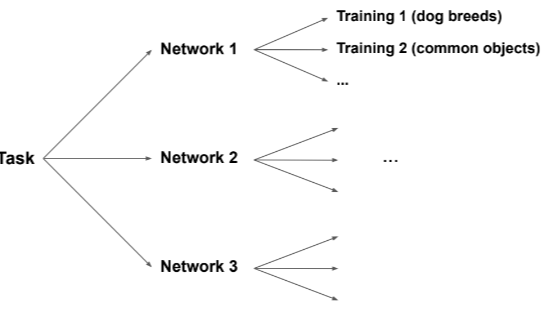

THE MODEL

The model is the trained neural network. Because the same neural network can be trained on different datasets, one neural network can give rise to several different models. For example, an image recognition neural network can be trained to recognise light, nature, faces or objects.
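One way to picture "one network, many models" is to train the same architecture on two different datasets. In this toy stand-in for image recognition, the architecture is a two-input perceptron trained with the classic perceptron learning rule, and the two datasets are the logical AND and OR truth tables. The helper names `train_perceptron` and `predict` are hypothetical.

```python
def step(z):
    """Threshold activation: output 1 if the weighted sum is positive."""
    return 1 if z > 0 else 0


def train_perceptron(dataset, epochs=20, lr=1.0):
    """Train the same perceptron architecture on a given dataset.

    Returns the learned parameters (weights, bias), i.e. the model.
    """
    n_inputs = len(dataset[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for x, y in dataset:
            pred = step(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = y - pred  # 0 if correct, +1 or -1 if wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(model, x):
    weights, bias = model
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)


inputs = [(0, 0), (0, 1), (1, 0), (1, 1)]
and_data = [(x, int(x[0] and x[1])) for x in inputs]
or_data = [(x, int(x[0] or x[1])) for x in inputs]

# Same network, same training code, two datasets -> two models
and_model = train_perceptron(and_data)
or_model = train_perceptron(or_data)

print("AND model:", and_model, [predict(and_model, x) for x in inputs])
print("OR model:", or_model, [predict(or_model, x) for x in inputs])
```

The training code is identical in both calls; only the data changes, and so the learned weights, which are the model, change with it.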