Now what?

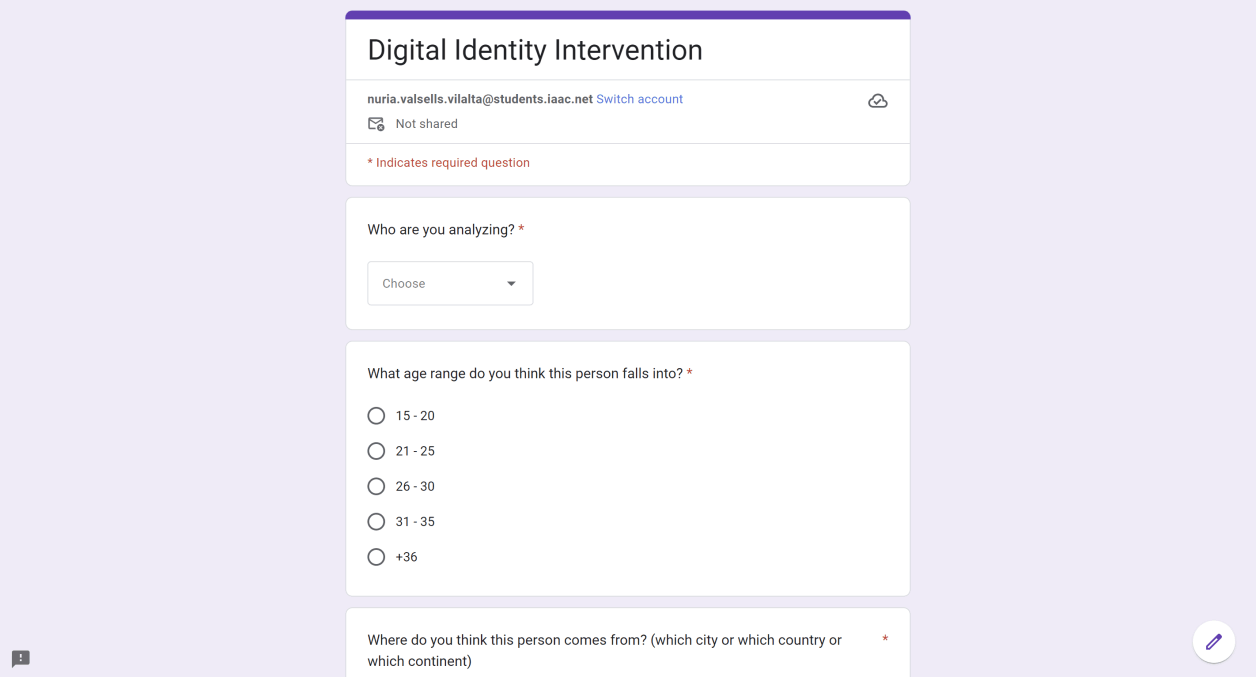

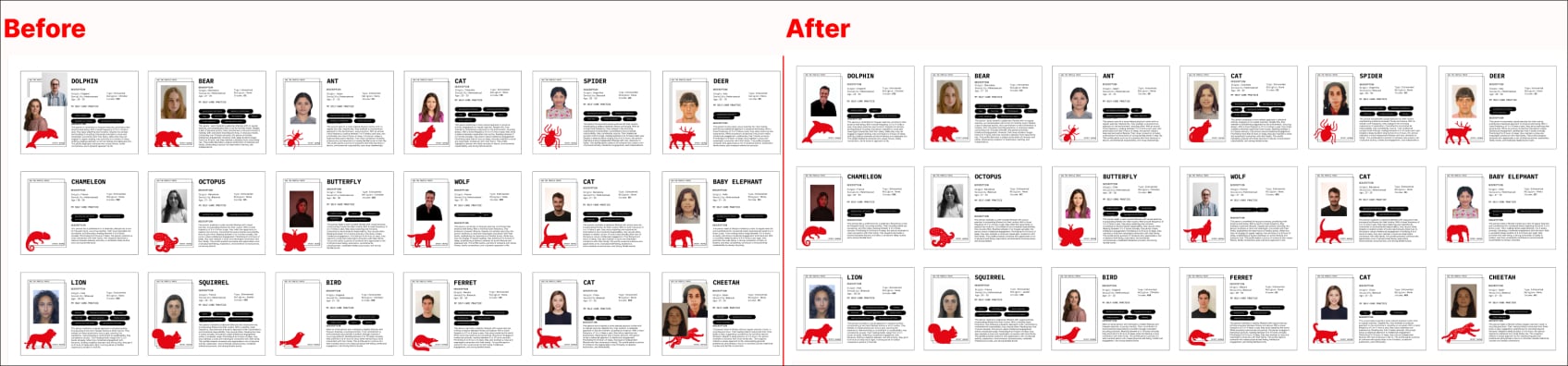

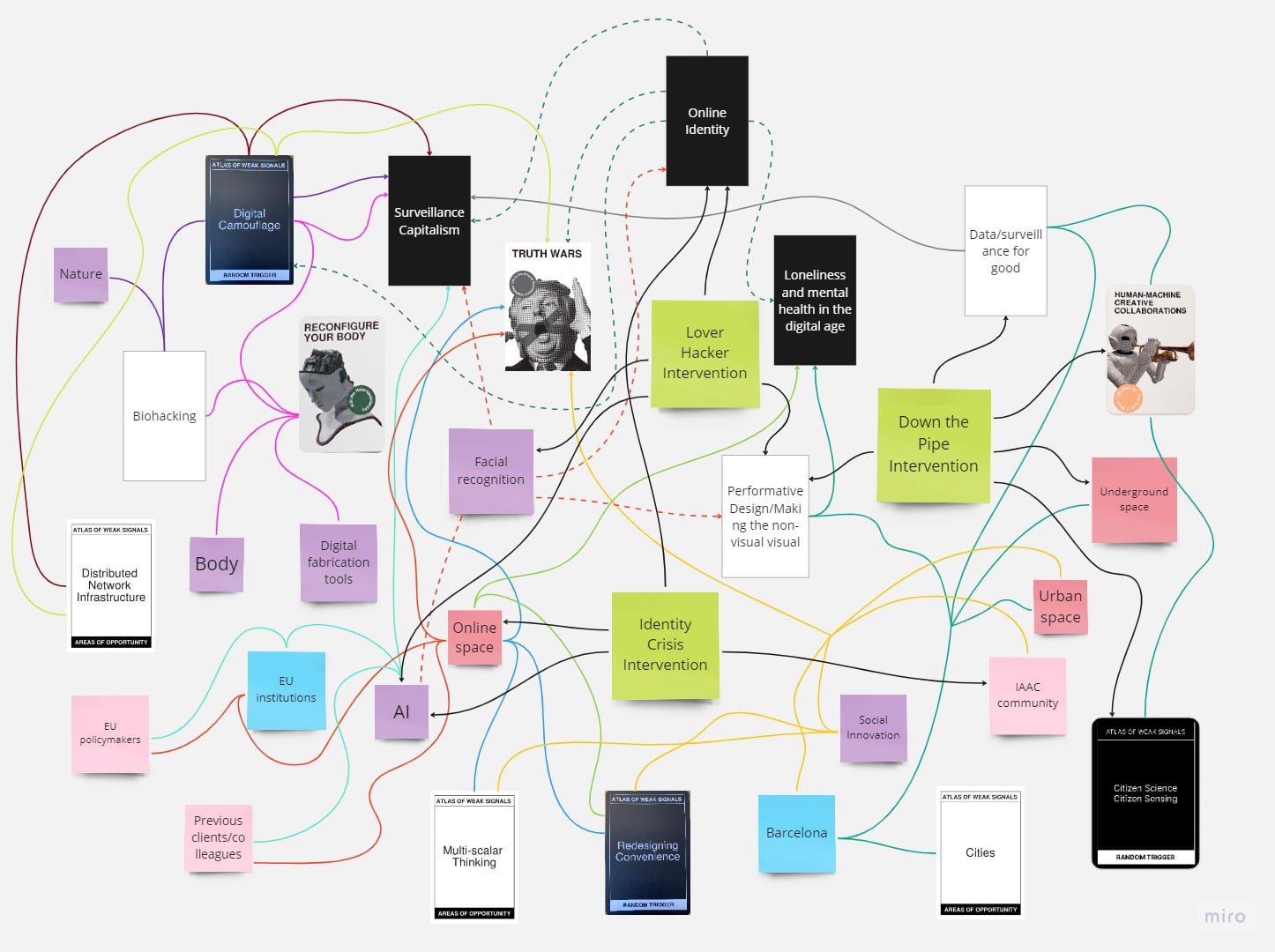

Moving away from my previous intervention, I decided to focus on a topic that interests me: online identity.

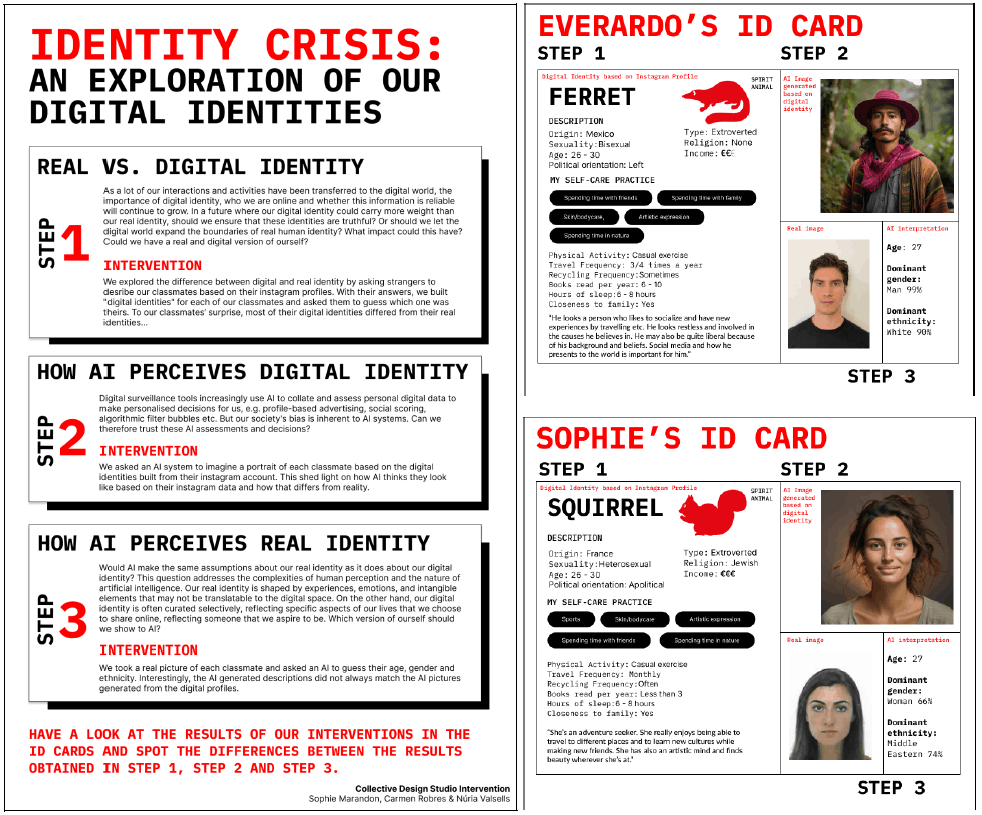

As many of our interactions and activities have moved online, the importance of online identity (who we are online and whether that information is reliable) will continue to grow. In a future where our online identity could carry more weight than our real identity, should we ensure that these identities are truthful? Or should we be able to reinvent ourselves? Could the online world expand the boundaries of real human identities, which are limited in the physical world? What impact could this have?

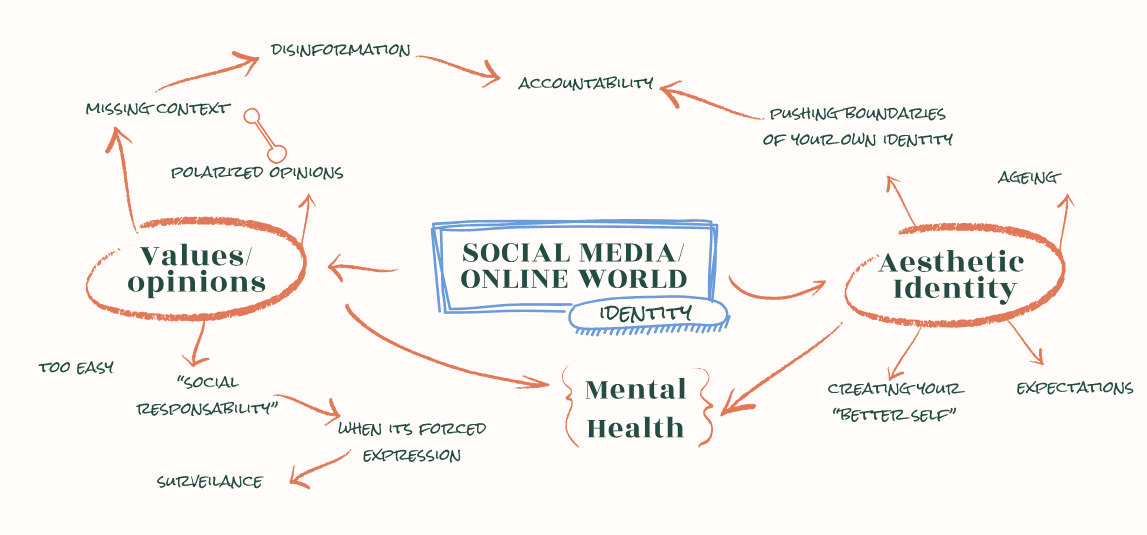

I joined Nuria and Carmen, who were also interested in the concept of online identity. Before narrowing down to a specific intervention, we discussed the many facets of online identity and how it can be linked to surveillance, disinformation and mental health, which are key themes in my personal design space.