What makes us human?

Intervention

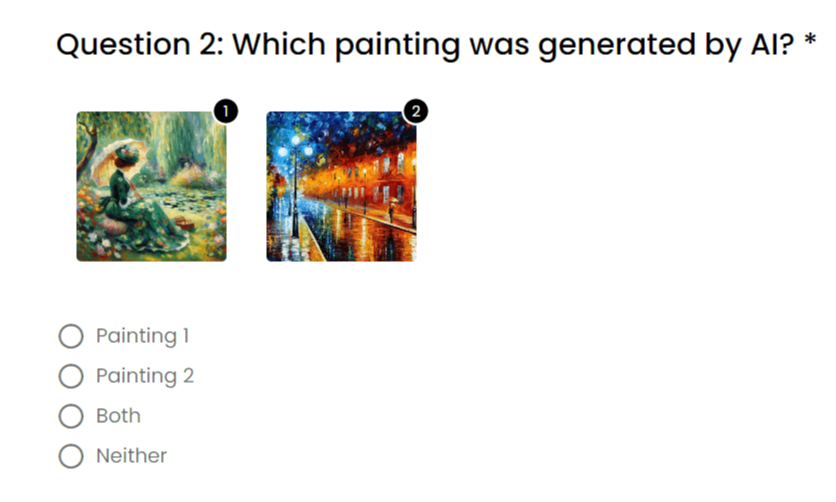

We went back to our initial reflection: we tend to trust technology more than we trust ourselves. This led us to ask what defines human identity, what defines AI identity, whether there is a difference between the two, and whether people can recognise that difference. So we created an intervention in which people had to tell AI-generated content apart from human-generated content.

We generated images, text and sounds with AI and collated the output in a survey. The AI tools we used were ChatGPT for text, DALL-E for images, and this Google Colab notebook for the voices.

Findings

We received about 30 responses to our survey, and the most interesting finding was that most people could not tell human-generated content from AI-generated content! When asked to explain their choices, most respondents said they had identified content as AI-generated because it seemed "too perfect".

Reflections

This made me wonder about the boundary between human and machine intelligence. On the one hand, it's kind of amazing that AI has advanced to the point where it can mimic human output so convincingly. On the other hand, it raises questions about what makes human intelligence and creativity unique. It also suggests that AI is no longer just about automating tasks; it's about tapping into the essence of human creativity and expression. At the same time, it's a reminder that as AI becomes more sophisticated, we need to be mindful of its implications.

AI now challenges our perceptions of authenticity and originality. If AI can replicate human output so effectively, what does that mean for the value we place on human creativity? And if people can't tell the difference between human and AI-generated content, does that diminish the significance of human contributions?

But perhaps most importantly, this result prompts us to reflect on what it means to be human. Despite AI's ability to mimic us, there's something inherently unique about human creativity and expression. Our imperfections, our emotions, our capacity for empathy: these are qualities that machines can't replicate. Our survey also showed that people tend to associate AI with perfection and truth, which links back to our initial reflection on why we tend to trust technology more than we trust ourselves.

The increasing integration of AI into so many aspects of life raises concerns about a possible path towards societal homogenisation, since AI's tendency to produce the most optimised output could erode humankind's individuality and diversity. AI also risks reinforcing a "winner-takes-all" mentality and existing biases, because it relies on mathematical tools that minimise errors and anomalies... but anomalies are normal among humans! So how do we make sure we keep our individuality and our humanity as we rely more and more on AI in our daily lives?